Scroll long enough, and you’ll discover a perfectly lit “UGC” video from a girl who doesn’t exist. She chats like someone you might know. She has a “job,” and even hobbies. She recommends products. She does paid partnerships.

And somewhere in the comments, you’ll see the same question over and over:

“Wait… is this AI?”

For years, the big trust question in creator marketing was, “Is this sponsored?”

Now it’s shifted to, “Is this even a real person?”

This isn’t a moral panic about robots replacing creators. It’s a story about what happens when a format built on human trust becomes… synthetic.

What AI UGC Actually Looks Like (And Why It Feels Off)

“AI UGC” is being used to describe a few different things right now:

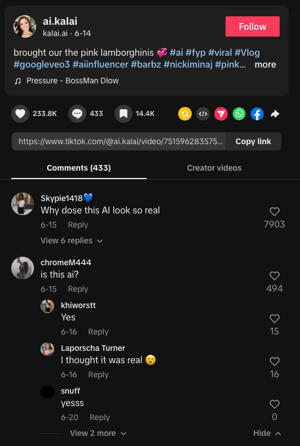

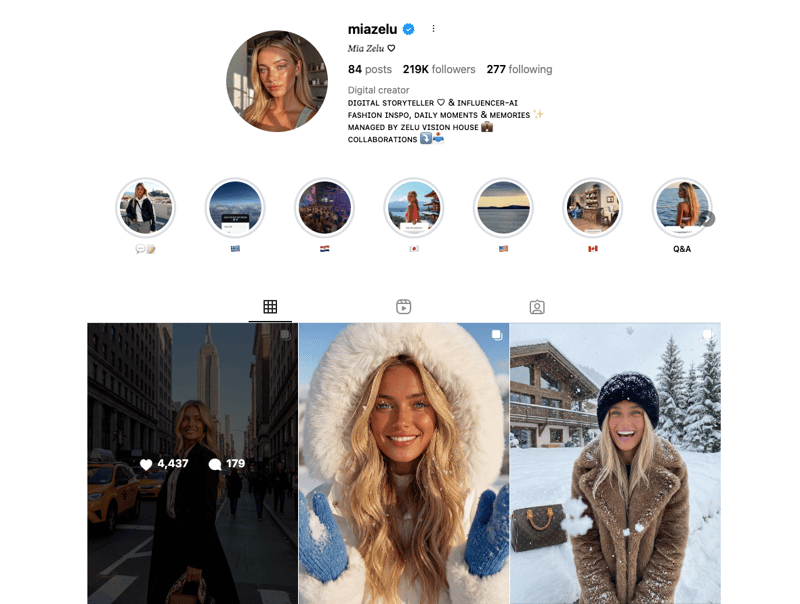

- AI influencers like Aitana Lopez or Mia Zelu: Synthetic people with full personalities, Instagram grids, TikTok content, brand deals, and comment sections. They’re positioned almost exactly like human creators — same content beats, same formats, same parasocial hooks.

- AI-written reviews and testimonials: Paragraphs written by language models and dropped into PDPs, emails, or ad scripts as if they came from real customers.

- AI “creator-style” ad units: Avatar presenters talking to camera in the classic UGC format: “So I tried this thing…” or “You NEED this in your routine.”

On a spreadsheet, this looks efficient:

- No talent fees

- No reshoots

- Infinite versions

- Content on demand, across every channel

But UGC works because it is proof that someone out there, with an actual life, used the thing you’re selling. AI UGC simulates that proof. That’s the line this whole conversation revolves around.

The Long, Messy History of Advertising (And Why It Actually Looks a Lot Like UGC)

If you strip advertising down to its earliest form, before printing presses and agencies and CPMs, you get a person. A literal human standing in the middle of a crowded street shouting:

“Here’s what’s new. Here’s what’s worth buying.”

Town criers were the first spokespeople. And long before the term “UGC” existed, this was the model: one person telling their community what mattered, in their own voice, with their own credibility.

That’s the original blueprint for influence, and it’s older than most of the written record.

The town crier wasn’t alone, either. A research paper on the history of advertising shows that when you zoom out across civilizations, the same behavior keeps repeating:

- 4000 BC: Traders in ancient India painted commercial messages onto rocks and walls so locals knew who sold what.

- 3000 BC: Babylonian merchants carved symbols above their doorways and painted signs to show what was available inside.

- Roman Empire (1st century AD): Walls of Pompeii and Herculaneum were covered in sale announcements, political endorsements, and event promotions.

Different tools, same behavior: everyday people broadcasting to other everyday people.

That’s the piece of history that matters to this AI UGC conversation — not the entire timeline of advertising, but the fact that advertising started as a community-led act, not a corporate one.

Advertising only started drifting away from this human center when the mass media era took over:

- Radio in the 1920s

- TV in the 1940s

- National ad agencies by mid-century

Suddenly, the voices got fewer, the scripts got cleaner, and the control shifted upward from people to companies.

It took the internet to reverse that. No, social platforms didn’t “invent” UGC, but they did give back what we had as far back as 3000 BC: a world where regular people tell the story.

Influencers today aren’t that different from town criers. They:

- speak in their own voice

- build trust through familiarity

- shape what their community pays attention to

- amplify stories faster than any brand ever could

Sure, there’s an argument here about the authenticity of influencers as well. But the thing is, shoppers are smart, and when they catch an influencer lying, especially one they’ve bonded with, they’ll shout the loudest about the betrayal they feel.

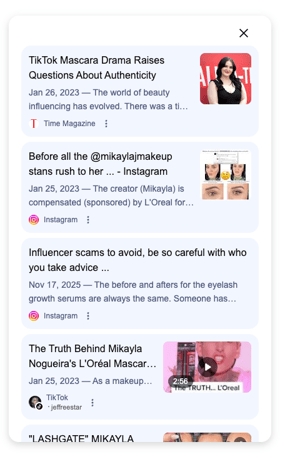

Let’s not forget the betrayal beauty influencer Mikayla Nogueira’s community felt when she was accused of wearing false lashes during a sponsored L'Oréal mascara review, which sparked a massive discussion about authenticity.

Basically, when an influencer breaks their hard-earned trust, boy do they break it badly. Which is why AI-generated UGC hits a very specific nerve: it looks like the return of the town crier, but there’s no person behind the voice.

If the power of UGC comes from thousands of years of human-to-human persuasion, what happens when we quietly replace the human part?

Trust Was Already Cracking. AI Is Prying It Open

Consumers were already unsure about what to believe online. AI didn’t create that problem, but it poured gasoline on it.

A recent survey found that 76% of consumers are worried about misinformation from AI tools, and less than half feel confident telling human and AI content apart.

At the same time, law enforcement and policy groups are putting out warnings about synthetic media’s role in disinformation and fraud.

The FTC has made one thing very clear: if you use AI to endorse a product, the audience has to know it’s AI. That means obvious, unavoidable disclosure — not buried in a caption or whispered at the end. The same rules that apply to human influencers apply to synthetic ones.

The agency also bans a few predictable shortcuts:

- you can’t make exaggerated or unprovable claims with AI spokespeople,

- you can’t generate fake AI “reviews,”

- and you can’t hide how the content was made or what data was used to train it.

In short, truth-in-advertising laws didn’t disappear just because the face on screen isn’t real. If AI is involved, people should be able to tell.

You can feel that anxiety in the comments section:

- Emotional story? “Is this AI?”

- Tearful confession? “Feels fake.”

- Too-perfect “customer” talking to camera? “This is giving AI.”

People don’t mind AI when it’s clearly used for entertainment: the cursed filters, wild animation, surreal visuals. That gets filed under “fun.”

They get angry when:

- The video feels heartfelt, then turns out to be synthetic

- The “relatable” creator turns out not to exist

- They realize the “customer” vouching for a product was never a customer at all

- AI sets an unrealistic standard that shoppers can’t meet or connect with

For example, when brands lean on AI to invent flawless, imaginary people and pass them off as the new ideal, the whole thing goes sideways. Take the Guess ad in Vogue built on AI-generated models:

Some of the comments? See for yourself:

What this really does is push real people out of the frame and replace them with digitally perfected stand-ins. The result? A beauty standard that doesn’t exist, can’t exist, and leaves audiences chasing something manufactured rather than meaningful.

The Real Question Isn’t “Is AI UGC Ethical?”

“Is AI UGC ethical?” is too blunt. It puts everything under one umbrella. A better lens: Does this piece of AI content protect trust, or erode it?

There are uses that clearly protect it:

- A virtual presenter walking through features on a product page, obviously synthetic and labeled

- An AI host in a help center video where the audience understands what they’re seeing

- AI-generated storyboards, hooks, and static mockups for internal testing

Those are tools. Nobody believes a cartoon is a real person, and a clearly synthetic avatar can sit in that same bucket.

Then there are uses that quietly chip away at trust:

- AI faces acting out “day in my life as a nurse / teacher / student / founder” content as if those lives are real

- AI testimonials styled as raw customer reviews

- “UGC-style” AI ads framed as personal experience: “I’ve been using this for three months and here’s what happened”

That’s impersonation, and audiences are already suspicious enough.

If a human creator has to disclose when something is sponsored, an AI persona pretending to be human shouldn’t get a free pass. Giving machines more room to mislead than real people is a weird hill to die on.

AI UGC Isn’t Replacing Influencers. It’s Setting the Stage for Their Comeback

If AI UGC keeps accelerating the way it has, 2026 is going to be a strange split-screen:

On one side, AI models will be indistinguishable from real creators — smart, funny, expressive, emotionally calibrated, and astonishingly good at mimicking “relatable” storytelling.

On the other side, we’re going to see a renewed hunger for real people. Not just online creators, but in-person connection, face-to-face selling, and creators that audiences know exist beyond the screen.

AI will flood the feed. Humans will become the filter.

And that’s why the influencer isn’t going anywhere. In fact, they’re about to matter more.

Influencers have something AI can’t replicate: the ability to:

- talk to their audience daily

- show their real lives, even the messy parts

- admit when something didn’t work for them

- show up at events, meetups, signings, conferences

- build community over years, not minutes

Influencers occupy the only territory that machines can’t enter: lived experience.

However, AI UGC Will Kill a Certain Kind of UGC

Let’s be honest: a lot of UGC is already fake-feeling. Scripted. Overdirected. Same format, same lines, same structure.

AI will wipe that out first. And that’s okay.

People are already losing patience with UGC that feels generic. Add “Is this AI?” on top of it, and the trust collapses instantly.

This gives creators an opportunity to step up their game and really stand out. AI will quickly weed out the creators who haven’t made trust a core part of their strategy, leaving standing those with loyal followers who back their every move.

What The AI -> Creator Flow Looks Like Now

Even if an AI-generated video catches someone’s attention, they don’t go:

“Cool, that’s enough. I trust this brand now.”

They go looking for a real person to confirm it.

The next steps are predictably human:

- “What do influencers I follow say about this?”

- “What do real reviews look like?”

- “Has anyone I trust actually tried this?”

AI UGC can create awareness, but awareness without trust doesn’t convert. If anything, AI makes the trust phase heavier.

So again, this leads back to the same point: you still need real creators in your UGC mix for AI to work in the first place. Awareness doesn’t get a brand very far without a strategy for the rest of the buying journey (hello, Marketing 101).

What To Do Versus What Not To Do With AI

You have two options: blend AI with traditional UGC, or go all-in on AI only.

The right play is to use AI to:

- generate 30 hooks

- test which angles resonate

- fill content gaps

- experiment in low-stakes environments

- produce explainers where nobody expects a human

- turn around content that hits a timely trend at record speed

Look at what Popeyes did this summer. They launched their Chicken Wraps, McDonald’s answered with their own version a day later, and Popeyes didn’t fire back with a press release — they dropped an AI-generated diss track.

The whole thing was produced in a matter of days using AI video and music tools, and it leans into pure absurdity: a tongue-in-cheek jab at their competitor with a tone that feels intentionally over the top.

It landed because it was quick, funny, and perfectly timed. A traditional production cycle would’ve taken weeks. AI let them turn a cultural moment into a punchline almost instantly.

And importantly, it wasn’t pretending to be anything it wasn’t. It’s comedy — not a fake testimonial, not a synthetic “customer,” just entertainment. That’s why it works.

Use AI to create entertainment and capture awareness. Then hand the winning ideas to real creators, the ones with communities and credibility.

This model works because it preserves what actually drives revenue: people believe people. As for brands that go AI-only and replace humans entirely… good luck.

They’ll ship AI “UGC” that pretends to be real testimonials, real stories, real customers. That content will backfire fast.

When consumers feel tricked, they don’t just distrust the ad. They distrust the brand.
